Week 15, 2025 - Kung Fu

This week in my Engineering Manager challenges series, I wrote about handling an ambitious developer who announces they want to become an Engineering Manager too: goals, risks, details to discover, and ways to support this engineer regardless of current organizational opportunities. Read it here if you missed it.

You’ll notice there’s a lot of MCP stuff in this issue. If you have no idea what the Model Context Protocol is, Gergely Orosz wrote a great deep-dive explaining the concept and showing some use cases from the angle of AI-enhanced IDEs. I’m approaching it from the other end: instead of extending an IDE like Cursor with MCPs, I connected MCPs to the Claude desktop app and experimented with them through the chat interface.

Watching Claude use these MCPs felt like the scene in The Matrix where Neo learns kung fu. That’s more or less what’s going on behind the scenes, actually: the LLM is told which new extensions are available, and depending on the prompt, it decides to connect to them and use the data coming back to shape its reply. These new skills of reading and editing files, querying a database, controlling a browser, or running commands feel as natural as if they had been part of Claude from day one.
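Under the hood, MCP messages are JSON-RPC 2.0: the app asks each server for its list of tools (with descriptions), and when the model decides a tool would help, the app sends a call on its behalf. A minimal sketch of the two requests involved, `tools/list` and `tools/call`, assuming a hypothetical `read_file` tool with a `path` argument; the transport (stdio or HTTP) and the initial `initialize` handshake are left out.

```python
import json
from typing import Optional


def make_request(request_id: int, method: str, params: Optional[dict] = None) -> str:
    """Serialize a JSON-RPC 2.0 request, the message format MCP is built on."""
    message = {"jsonrpc": "2.0", "id": request_id, "method": method}
    if params is not None:
        message["params"] = params
    return json.dumps(message)


# 1. The client asks a server which tools it offers. The names, descriptions
#    and input schemas in the reply are what the model gets to "learn" from.
list_tools = make_request(1, "tools/list")

# 2. When the model decides a tool would help, the client calls it on its behalf.
#    "read_file" and its "path" argument are hypothetical placeholders.
call_tool = make_request(2, "tools/call", {
    "name": "read_file",
    "arguments": {"path": "project_description.md"},
})

print(list_tools)
print(call_tool)
```

Real servers advertise their own tool names and input schemas, so the exact `arguments` depend on what `tools/list` returns.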

📋 What I learned this week

After a week of experimenting, this is how I code now with Claude and two MCPs:

- Desktop Commander - Built on top of Filesystem Server, this tool allows Claude to read and write files, make token-efficient text edits, execute terminal commands, and (as of 3 days ago!) read files from URLs too.

- Browser MCP - Gives the LLM full control of a Chrome browser window. I write more about this tool below. I usually connect an incognito window just for the sake of security, but giving it access to a logged-in browser has its advantages too: it can skip authentication and possible CAPTCHAs.
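The "token-efficient text changes" idea is that Claude sends only the snippet to change and its replacement, instead of re-emitting a whole file. I haven’t checked Desktop Commander’s internals, so this is a minimal sketch of a search-and-replace edit under that assumption; `apply_block_edit` is a hypothetical helper, not the tool’s API.

```python
import tempfile
from pathlib import Path


def apply_block_edit(path: Path, old: str, new: str) -> None:
    """Replace exactly one occurrence of `old` with `new` in the file at `path`.

    Hypothetical helper: the model sends only the snippet to change and its
    replacement, instead of re-emitting the whole file.
    """
    text = path.read_text(encoding="utf-8")
    matches = text.count(old)
    if matches != 1:
        # Refuse missing or ambiguous matches rather than guessing which one to edit.
        raise ValueError(f"expected exactly one match, found {matches}")
    path.write_text(text.replace(old, new, 1), encoding="utf-8")


# Usage: edit one line of a small file in a throwaway directory.
with tempfile.TemporaryDirectory() as tmp:
    target = Path(tmp) / "server.js"
    target.write_text("const port = 3000;\napp.listen(port);\n", encoding="utf-8")
    apply_block_edit(target, "const port = 3000;", "const port = 8080;")
    edited = target.read_text(encoding="utf-8")

print(edited)
```

Requiring exactly one match is a design choice: an ambiguous snippet fails loudly instead of silently editing the wrong place.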

I found that a slow, methodical, step-by-step approach works better than jumping right into vibe coding, so as a first step, I collaborated with Claude on a high-level description of the MVP of the app I have in mind. We covered both the functional and the technical side, including things like working in Node and React, using Test-Driven Development, deploying with Docker Compose, and following SOLID principles where applicable. Once we were at a good stage, I asked it to write these requirements out to a project_description.md file.

Next, I asked Claude to create the boilerplate environment based on the project description file: a simple hello world application I could use to fine-tune hot reload and deployment, plus a README.md describing installation in both production and development environments. After a few rounds of back-and-forth bug fixes, we had a working hello world app.

Then, in a new chat, I asked Claude to thoroughly examine all the files in the project’s folder, study the architecture of this web app, and note its learnings in a Claude.md file that later sessions can use to get up to speed quickly.

Keeping these .md files is important: to avoid using up the context window too fast, every step starts in a new chat, and each new chat begins by reading what we’ve made so far.
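The point of these notes files is that a fresh chat starts from a few short documents instead of the whole previous conversation. A rough way to see what a new session begins with: concatenate the notes and estimate their size. The file list and the roughly-4-characters-per-token rule of thumb are my assumptions for illustration, not anything Claude uses internally.

```python
import tempfile
from pathlib import Path

# Hypothetical list of notes files a fresh chat starts from, most general first.
CONTEXT_FILES = ("CLAUDE.md", "README.md", "IMPLEMENTATION_PLAN.md")


def build_session_context(project_dir: Path, files=CONTEXT_FILES) -> str:
    """Concatenate whichever notes files exist into one block of context."""
    parts = []
    for name in files:
        path = project_dir / name
        if path.exists():
            body = path.read_text(encoding="utf-8").strip()
            parts.append(f"## {name}\n\n{body}")
    return "\n\n".join(parts)


def rough_token_count(text: str) -> int:
    """Ballpark only: assumes ~4 characters per token, real tokenizers differ."""
    return (len(text) + 3) // 4


# Usage: a throwaway project folder that has two of the three notes files.
with tempfile.TemporaryDirectory() as tmp:
    project = Path(tmp)
    (project / "CLAUDE.md").write_text("Architecture notes.\n", encoding="utf-8")
    (project / "README.md").write_text("Installation steps.\n", encoding="utf-8")
    context = build_session_context(project)

print(context)
print("approx. tokens:", rough_token_count(context))
```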

At this stage, I could give a prompt to create our implementation plan:

We have a boilerplate application framework in our default directory. Study thoroughly the Claude.md and README.md files that describe what we have already. Once you have a comprehensive understanding of the context, let's have a plan to implement the application in project_description.md. This plan should be a list of sequential steps, each reaching a testable, self-contained, shippable milestone. Steps are building upon each other. Get granular and include all small steps.

Create this list of implementation steps in IMPLEMENTATION_PLAN.md, listing them one by one starting with [ ], so as we develop, we can check them ([x]). Every step should be worded as a verifiable, completed action, for example "Database is set up with dummy data" or "Contacts are listed on main page in a table", etc. Steps can have substeps.
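Because every step is a checkbox, the plan doubles as a progress tracker that a new chat (or a tiny script) can read to find where to continue. A minimal sketch, assuming the steps are Markdown task-list lines (`- [ ]` / `- [x]`) with indented substeps; the sample reuses the two example steps from the prompt, and its substep is made up.

```python
import re

# A checkbox line: optional indent, optional "-" or "*" bullet, then [ ] or [x].
CHECKBOX = re.compile(r"^(\s*)[-*]?\s*\[( |x|X)\]\s+(.*)$")


def parse_plan(markdown: str):
    """Return (indent, done, text) for every checkbox line in the plan."""
    steps = []
    for line in markdown.splitlines():
        match = CHECKBOX.match(line)
        if match:
            indent, mark, text = match.groups()
            steps.append((len(indent), mark.lower() == "x", text.strip()))
    return steps


def next_step(steps):
    """The first unchecked step, i.e. what the next session should implement."""
    for _, done, text in steps:
        if not done:
            return text
    return None


def progress(steps):
    """(completed, total) across steps and substeps."""
    return sum(1 for _, done, _ in steps if done), len(steps)


plan = """# Implementation plan
- [x] Database is set up with dummy data
- [ ] Contacts are listed on main page in a table
  - [ ] Table has sortable columns
"""

steps = parse_plan(plan)
print(next_step(steps))
print(progress(steps))
```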

As a double-check, I then started a new chat to verify the plan:

We have a boilerplate application framework in our default directory. Study thoroughly the CLAUDE.md, README.md files that describe what we have. Once you have a comprehensive understanding of the context, check IMPLEMENTATION_PLAN.md, and validate that these steps are correct, complete, necessary, and pragmatic actions to build this app, resulting in a sustainable, further developable webapp.

Finally, after all this, I could say in a new chat:

Let's implement Phase 1.

And watch as Claude writes the first failing tests, then, after confirming everything is in order, implements the code to make them pass, running the test cases it created along the way. All without any interaction from me. Pretty cool.
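For readers new to Test-Driven Development, the loop Claude follows is "red, then green": write a test that fails, then write just enough code to make it pass. The project itself is Node and React; this sketch transposes the idea to Python’s `unittest` purely as an illustration, and the `format_contacts` helper is hypothetical, loosely inspired by the "Contacts are listed on main page in a table" example step.

```python
import unittest


# Step 1 ("red"): the test comes first and describes the expected behaviour.
# Run on its own, it fails because format_contacts doesn't exist yet.
class FormatContactsTest(unittest.TestCase):
    def test_sorts_by_last_name_and_joins_names(self):
        contacts = [
            {"first": "Ada", "last": "Lovelace"},
            {"first": "Alan", "last": "Turing"},
            {"first": "Grace", "last": "Hopper"},
        ]
        self.assertEqual(
            format_contacts(contacts),
            ["Grace Hopper", "Ada Lovelace", "Alan Turing"],
        )


# Step 2 ("green"): the minimal implementation that makes the test pass.
def format_contacts(contacts):
    ordered = sorted(contacts, key=lambda c: c["last"])
    return [f'{c["first"]} {c["last"]}' for c in ordered]


# Run the suite in-process (without sys.exit) and keep the result.
suite = unittest.defaultTestLoader.loadTestsFromTestCase(FormatContactsTest)
result = unittest.TextTestRunner(verbosity=0).run(suite)
```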

Of course, this is not a silver-bullet approach: more than once, I had to intervene because development took a turn I didn’t like. Also, right before the first step, I committed everything to git so I could roll back to a previously working state and simply restart in a new chat session with a more prescriptive approach.

This process is very similar to Claude Code (which I tested a few weeks ago), but much cheaper, because everything is covered by the $20 Pro subscription, as long as you’re patient enough to wait another day once you use up your quota.

🤔 Articles that made me think

Setting targets for developer productivity metrics

This is a recording of DX’s recent webinar on engineering performance. Here are the thoughts I noted for myself:

- Separate your metrics into Controllable Input Metrics, which are actionable within the team’s area of control (e.g., code review turnaround time), and Output Metrics, which are high-level trends captured over time (e.g., PR throughput). Then map which Input Metrics impact which Output Metrics, and if you want to improve an output, experiment with actions targeting the inputs that feed it.

- These metrics can be imagined as a pyramid: the foundation is the Controllable Input Metrics, which roll up to Output Metrics, which in turn can roll up, if necessary, to a more abstract composite index for high-level reporting (e.g., “CEO wants one number to describe engineering performance.”).

- If you want to avoid people gaming the system, choose multidimensional metrics, target inputs (see above), celebrate effort and improvement (vs. hitting predetermined numbers), and give teams time to do the work. In general, invite teams into the process; don’t just drop numbers on them as goals.

- The goal is to improve the whole system, not individual performance.
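To make the pyramid concrete, here is a minimal sketch of its two links: checking whether an input metric moves with an output metric (a Pearson correlation, which hints at a relationship but doesn’t prove cause), and rolling normalized output metrics up into one weighted composite number. The weekly figures, the `deploy_frequency` metric, and the weights are toy values I made up for illustration; the webinar didn’t prescribe any formula.

```python
from statistics import mean

# Toy weekly numbers for one team, invented purely for illustration.
review_turnaround_hours = [30, 26, 22, 18, 15, 12]  # controllable input metric
pr_throughput = [14, 15, 17, 18, 21, 22]            # output metric (PRs/week)


def pearson(xs, ys):
    """Pearson correlation: a first hint (not proof) that an input moves an output."""
    mx, my = mean(xs), mean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = sum((x - mx) ** 2 for x in xs) ** 0.5
    sy = sum((y - my) ** 2 for y in ys) ** 0.5
    return cov / (sx * sy)


def composite_index(normalized_outputs, weights):
    """Top of the pyramid: weighted average of output metrics already scaled to 0..1."""
    total = sum(weights.values())
    return sum(normalized_outputs[name] * w for name, w in weights.items()) / total


r = pearson(review_turnaround_hours, pr_throughput)
# Hypothetical second output metric, just to show the roll-up.
index = composite_index(
    {"pr_throughput": 0.7, "deploy_frequency": 0.5},
    {"pr_throughput": 2, "deploy_frequency": 1},
)
print(f"turnaround vs throughput correlation: {r:.2f}")
print(f"composite index: {index:.2f}")
```

A negative correlation here would mean shorter review turnaround goes together with more PRs shipped, which is the kind of input-to-output link worth experimenting on.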

Shopify’s Internal AI Memo

This is what it looks like when a company tries to fully embrace AI. Individuals are expected to learn and use AI tools efficiently, to the point that it becomes part of their performance evaluation. Teams need to justify requests for additional headcount by proving they can’t get what they want done with AI. Prototyping should be dominated by AI use.

While it sounds dystopian, I think there’s an angle where this looks better: the message is about learning to use new tools, with a strong expectation to experiment and learn during work hours. Sweet! It also sends a reassuring message to AI-hungry investors that Shopify is the right company to bet on. Sure, there’s a huge hype aspect, but it doesn’t hurt to please the owners of a company with a 50+ P/E ratio in today’s stock market.

At gaming companies, however, pushing AI into creative artistic jobs sounds like a trainwreck.

🖥️ Something cool: Browser MCP

Give your MCP-compatible AI tool a browser to control, and ask it to do tasks like researching the web, filling out forms, and analyzing websites. I played with prompts like:

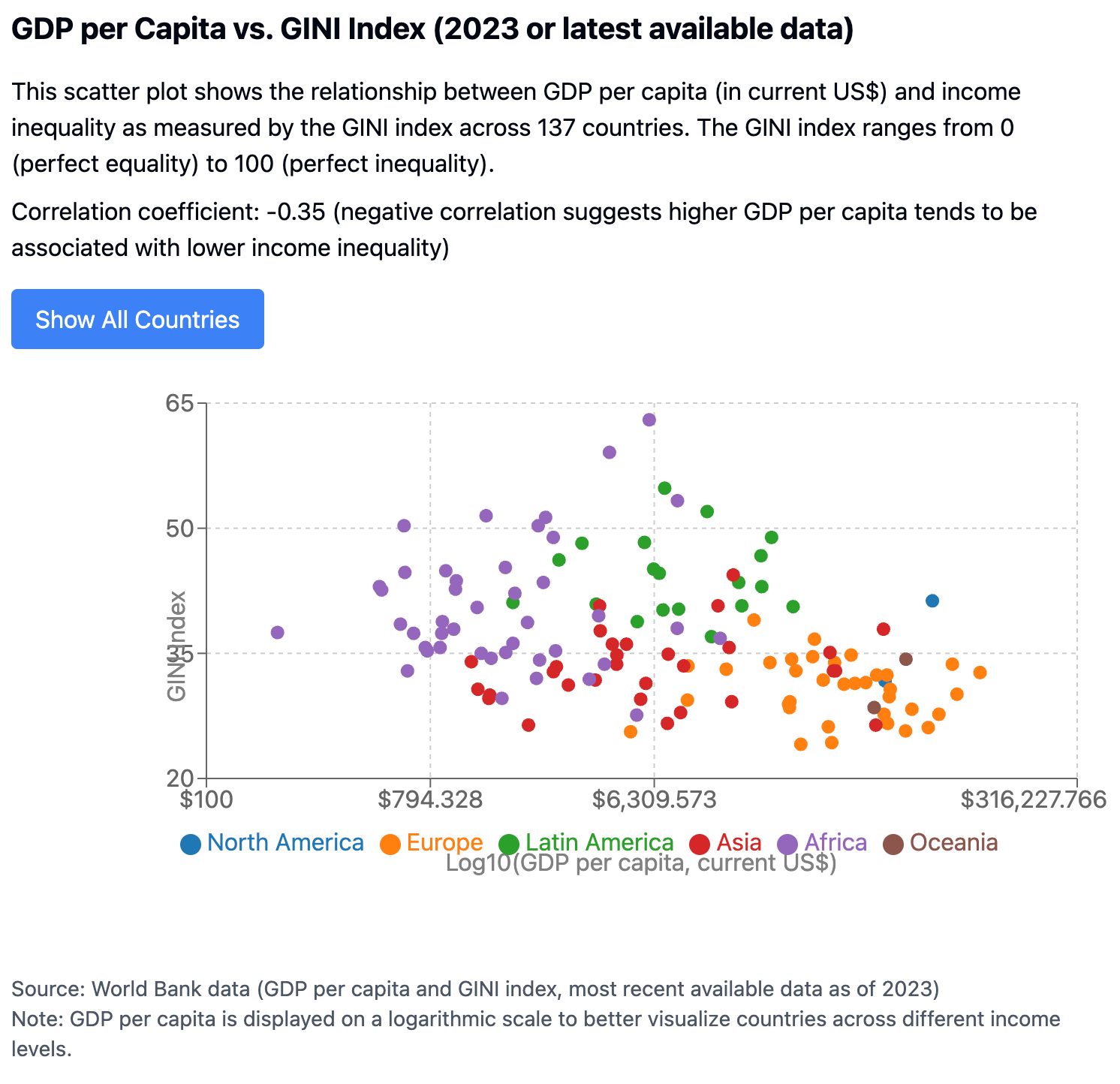

- Check this website (<http://localhost:8000>) for common XSS and similar attack vectors.
  → This was a basic web app I was working on, and Claude tried simple XSS and SQL Injection tricks. It didn’t find any issues, but it was fun to watch!

- Are there direct flights between Lyon and Budapest, and if so, how much does it cost to go in May?
  → At first, it missed the only airline currently connecting these two cities, but after a little nudge, it found relevant prices. Still, I’d never use this instead of dedicated sites…

- Explore and summarize https://peterszasz.com
  → After loading the site, it navigated to the About page and found enough info to give a decent summary.

- Create a scatter graph of GDP/capita vs GINI index around the world. Search the web for the latest data.

This last one took quite a while, but it eventually created an interactive React artifact: check it below or play with it yourself here.

This is far from a dedicated research assistant in its current form: I don’t 100% trust that it’s not hallucinating data or making other mistakes, and the operation is quite slow. Still, pretty mind-blowing to reach this result from a simple prompt.
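The XSS prompt above boils down to a simple idea: type a suspicious string into a form and see whether the page echoes it back unescaped. A minimal sketch of that check, assuming a hypothetical page that reflects a search query; the probe strings and `render_search_page` are illustrative stand-ins, and this crude heuristic is no substitute for a real security scanner.

```python
import html

# Hypothetical probe strings of the kind a scanner (or Claude driving a
# browser) might type into a search box or form field.
PROBES = [
    "<script>alert(1)</script>",
    '"><img src=x onerror=alert(1)>',
]


def reflected_unescaped(probe: str, page_html: str) -> bool:
    """Crude heuristic: the raw probe shows up verbatim in the returned HTML.

    A hit suggests the input was echoed without escaping (a reflected-XSS
    candidate worth checking by hand); no hit does not prove the page is safe.
    """
    return probe in page_html


def render_search_page(query: str, escape: bool) -> str:
    """Stand-in for a server response that echoes the user's query back."""
    shown = html.escape(query) if escape else query
    return f"<p>You searched for: {shown}</p>"


for probe in PROBES:
    for escape in (True, False):
        page = render_search_page(probe, escape)
        print(f"escape={escape} reflected={reflected_unescaped(probe, page)}")
```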

That’s it for this week, catch the sun during the weekend,

Péter