Week 37, 2024 - Sick Data

In 2002, a friend of mine used to work in CERN on a cool project: writing the software stack for a new generation of network cards that could transfer an unprecedented amount of data in a very short time. You know, all the stuff you want to record when you smash subatomic particles. They needed to ensure the software layer could store everything when the data floodgates open. It’s frustrating that I don’t remember the actual numbers anymore, but one thing stuck with me: when I asked how the hell is this network infrastructure, supposedly magnitudes faster than anything available today, even work, then he just laughed saying something like “We don’t know yet, the hardware team has two years to figure it out.”

This is classic waterfall, probably the opposite of what you need during wartime software development, and a good example that every project and every organization has its unique needs. You can’t just blindly adopt the latest trendy methodology without first understanding your specific context and the exact problem you're trying to solve.

Also, if you’re near Geneva, take a day trip to the CERN, they have a cool visitor center.

I chose this photo for today’s newsletter to illustrate all the data moving and finetuning stuff I did on my homelab. But to be honest, I spent most of the week in bed with flu (or something similar). If you have kids at home, you are probably familiar with back-to-school sickness. I’m mostly recovered now and everyone else is OK, but what I could accomplish this week was severely limited both in depth and significance.

📋 What I learned this week

After a long summer break, we’ve launched the next episode of our podcast with Jeremy, The Retrospective. We’re discussing my Interviewing article, focusing on Engineering Managers applying for a new job. The publishing was an interesting learning experience for me, it was the first time I took this task from Jeremy. I learned that Descript is a professional editing tool that allows you to modify a video by working on the text transcription of it — pretty cool.

You can find the episode on Spotify, YouTube, or in your usual podcast app by searching for The Retrospective. We’re working on getting back to a more regular schedule.

Progressing with my photo hosting project, I installed Immich in a separate LXC. I was slightly worried because of the bug warning on their front page, but so far the experience is top-notch. Super mature product. That being said, processing a half-terabyte external library of 125.000 files takes a long time on a 13-year-old computer, especially because said files are on a USB2 drive. I didn’t even turn on video transcoding, the thumbnail generation alone took more than a day.

For face recognition, I wanted to use my laptop, and Immich’s microservices architecture supports this setup out of the box. Remote machine learning is blindingly fast - like a magnitude or two quicker. Understandably, the shared CPU of a 2011 Mac Mini vs. most of the dedicated power of a 2021 MacBook Pro is not comparable. The setup was super straightforward and took only minutes. After experimenting with various settings, I found that running six concurrent Face Detection processes allowed me to use my laptop without a noticeable impact on performance.

I thought I had a well-organized photo library, but still wanted to be sure there were no duplicates. I found czkawka, an amazing app for finding similar photos. Focusing on just identical files, it found 1441 duplicates taking almost 10GB of space. Not bad!

Finally, after the initial import, I realized a lot of my old photos don’t have EXIF dates, so they are imported with a recent date, messing with my timeline in Immich. Analog scans, post-processed images, family photos received from others, old videos, etc. I wanted to fix this at the source (Immich doesn’t have write rights on my files for safety reasons), adding missing EXIF data where it’s needed. Instead of looking for a ready solution (of which there are plenty I’m sure), I ran another experiment to see how far I could go developing a script without any actual coding, only collaborating with LLMs.

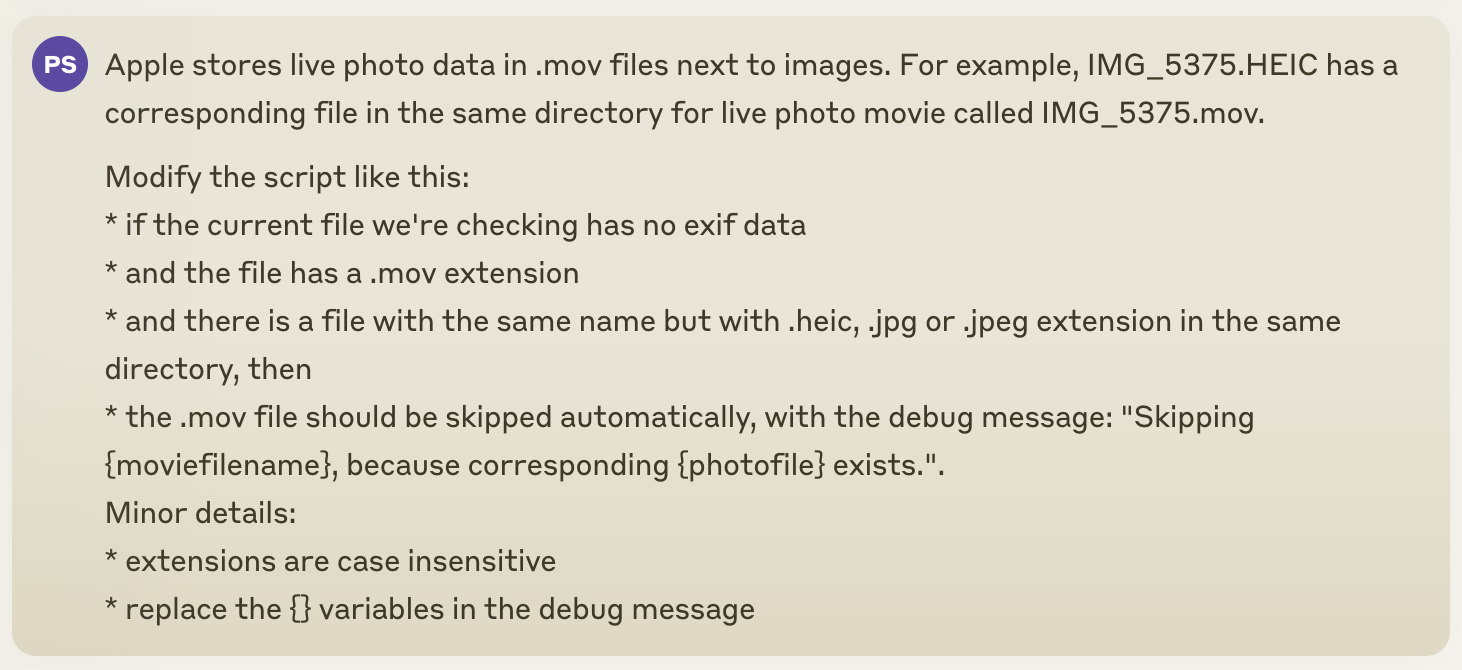

The Artifact-based workflow of Claude AI was a good start for this task, though in hindsight I should’ve probably used Cursor instead. I wanted to create an interactive command line script that asks and sets the EXIF date if a file lacks one. I wanted various usability improvements, like being able to only write a partial date, use the same value multiple times, not having to deal with live photo videos, etc. I screenshotted one of the more complex prompts I was using during our collaboration:

This level of precision shapes the resulting algorithm to what I had in mind - which can be a blessing or curse, though most of the time I found that I need to hold the LLM back from overengineering things.

If you’re curious, check the final script (I chose JavaScript because I’m more familiar with it than shell scripting, so I could verify the results and help Claude fix problems). There are a bunch of things I hate in it (returning a string or a boolean depending on the result makes the hair stand on my arms for example), but it works well and was magnitudes faster to create, modify, and maintain in the future than writing alone from scratch.

🎯 What I want to try next week

I want to launch the second part of our podcast episode about interviewing. We have a few recordings in the pipeline ready to edit and publish, and then we’re on to Season 2. Exciting!

In the spirit of practicing finishing, I want to timebox all my photo-hosting activities and improvements to be finished by the end of next week. Acceptance criteria: all files should be de-duplicated, EXIF-tagged, and backed up offsite.

🤔 Articles that made me think

Zero to launch app development in 2 weeks with AI

Detailed report and a bunch of tips from the journey of a simple app’s ideation, coding, illustration, app store submission, and launch; by someone with only 3 weeks of (similarly AI-assisted) web development experience. Sure, the result is nothing complex, but it’s still mindblowing, especially the two-week part. (If you ever worked with Apple on store submissions, you know what I mean.) The empowerment of non-technical people to develop software is happening faster than I thought.

Asking the Wrong Questions

Benedict Evans explains a realization with entertaining examples from the past: predictions are mostly wrong because they have an implicit context of the present, a context that they are extrapolating from, ignoring that these contexts are as fluid as the technology within them. This can be applied to engineering leadership too, especially now, when some of you are probably busy adjusting Q4 roadmaps, and started to think about 2025 too. Step back and try to look at the whole context: Are you planning to fix something that you could just migrate away from? When was the last time you compared on-prem hosting and AWS? That ambitious project you started last year preparing for the then-expected user needs, is it still relevant? How old is your latest user study you’re extrapolating from? Spend some time making sure you’re asking the right questions, and the quality of your answers will be magnitudes better.

🤖 Something cool: ChatGPT o1

Of course, it’s the new kid on the block that I wanted to talk about here, even though I haven't had the opportunity to test it firsthand yet. For those of you who are not following the news, OpenAI released o1-preview and o1-mini, the first two LLMs that are optimized for reasoning and problem-solving, to address the failure of current LLMs in similar tasks. This means extended "thinking" time, and I can only speculate about the resource demands, given the draconian usage limitations (30 messages per week(!) for Plus and Team users as of today).

The results seem impressive, check r/singularity or r/chatgpt for some cool examples. I want to cite one because it’s close to me: every morning I play New York Times crosswords and games to boot up my brain, and amongst these is a more recent one called Connections, a simple game where you need to arrange 16 words into groups of four by finding the common threads between them.

Well, ChatGPT o1 solved a recent Connections game in 21 seconds. This is a bigger deal than it might seem: these puzzles are skillfully constructed to mislead you. It’s a typical experience that I do find a connection between four words, but they will have to end up in different groups because otherwise there would be no solution for the remainder of the puzzle.

The fact that a "glorified autocomplete" can navigate through these logical minefields is mindblowing.

By the way, did you know where the name of these new reasoning models, Strawberry is coming from? If not, ask your favorite LLM to count how many letter R’s are in the word “strawberry”, and try to convince it’s not two but three. The amount of gaslighting it’s trying with you is ridiculous. Great job OpenAI for the elegant reaction to this Reddit meme.

That’s it for today, avoid the viruses this weekend,

Péter