Week 19, 2025 - Memory and Skill

It was 1995, springtime like now, and I was sitting one of my first university exams. The subject was UNIX shell scripting, and our task was to write some code using grep/awk/sed to manipulate text files. We had an hour or so to complete the script, and we had to work on paper. Yes, we were not allowed to use a computer. The argument was that if we had access to one, we could figure out the solution with a combination of man pages and trial and error. They said they wanted to verify what we had learned, not what we could do.

During the 2010s, when I was leading developer teams, our technical interviews usually asked the candidate to debug some code or implement a new function. We gave them only a simple text editor. We argued that if they had access to an IDE or Google, they could figure out the solution with a combination of syntax highlighting, code completion, and Stack Overflow. We said we wanted to verify what they knew, not what they could do.

Today, most companies do everything they can to prevent candidates from using LLMs in their technical interview processes. Some go as far as abandoning remote interviews and bringing back in-office ones. They argue that if candidates had access to AI, they could figure out the solutions with clever prompting and smart agents. They say they want to verify what the candidates know, not what they can do.

Nobody was writing programs on paper by 1995. Using sophisticated IDEs and Stack Overflow was prevalent during the 2010s. Plenty of software development teams do LLM-assisted coding now.

Why are we testing whether candidates have memorized something by heart when the work we ask them to do is about skillfully using the latest tools available?

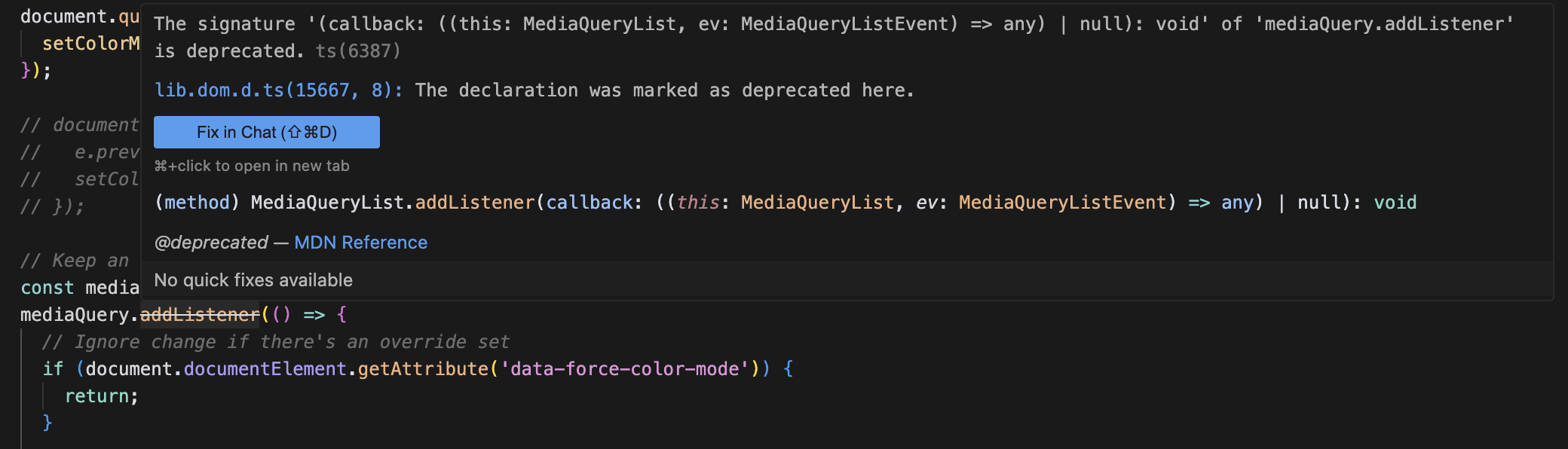

I had the above chain of thought after fixing dark mode on my blog yesterday. I opened the code in Cursor, and it was pretty obvious what was wrong:

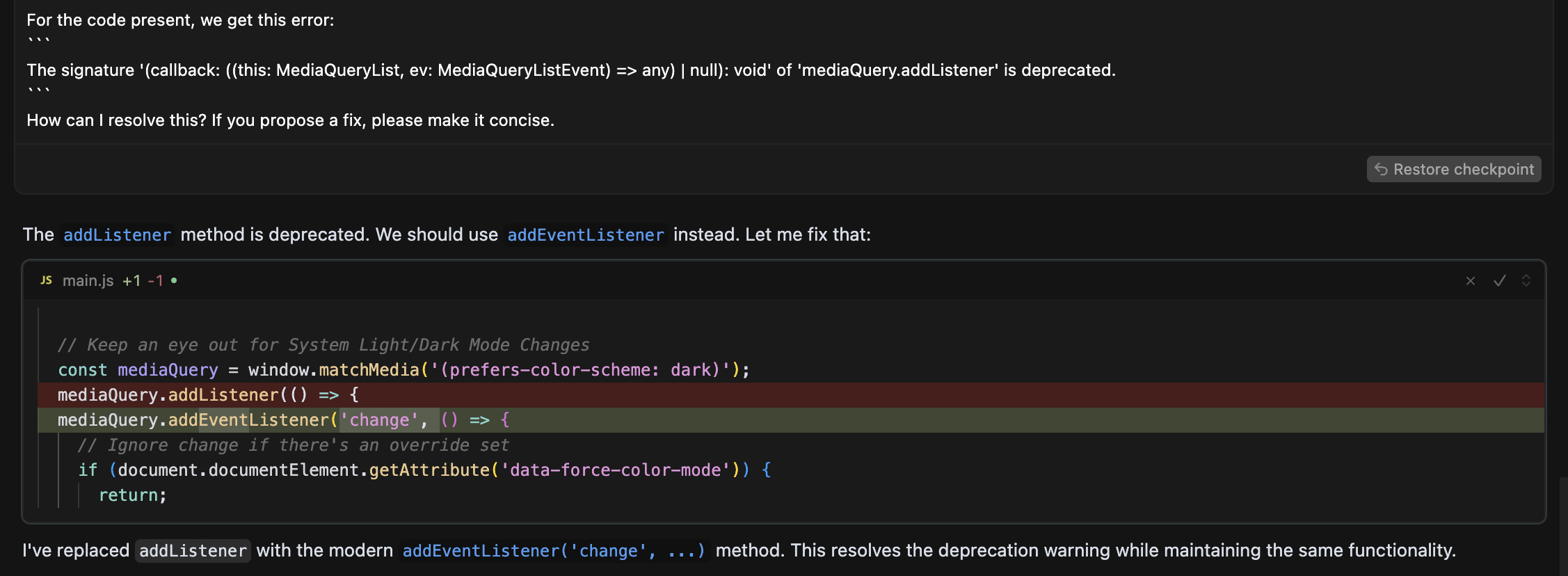

I just clicked Fix in Chat. It even wrote the prompt for me, executed it, and proposed a diff to solve the issue:

That’s it. Two clicks: Fix, Accept.

Should we fail someone in a technical interview for not being able to fix this?

Does it matter what the method is called if they are familiar with the concept of event listeners?

Who’s the better developer: the one who follows every specification change of a language (and stays up to date on how and when browsers implement these changes), or the one who gets the job done?

I guess the answer, like with most things, is: it depends.

Which gives me a nice segue to the Engineering Management challenge of the week: Who to hire? A Specialist or a Generalist? I listed some aspects to consider here.

🤔 Articles that made me think

Ads Soon on ChatGPT

Google was a clean tool with a universally understood offering. It searched webpages and showed ads next to the results. ChatGPT is, increasingly, an aide, assistant, partner, and confidant. People ask it for advice and share their thoughts and feelings with it. Using (abusing?) this relationship to influence purchase decisions will have a giant impact on online advertising.

Or!

Imagine two ChatGPT subscription tiers: $20 a month for unbiased access, or free unlimited use, but sales pitches might appear in some discussions.

“You’ve been struggling with this macOS gaming performance issue for a while. Why don’t you just give up and buy the new PlayStation? I found a 10% coupon that expires soon. Just click here to use this deal now, and I’ll have it already applied to the price for you!”

Which one would you choose?

I'd Rather Read the Prompt

An enthusiastic plea to stop using AI in writing. There are a lot of arguments I agree with here. The one that resonated with me most was that the actual point of writing is to communicate original thoughts. LLMs are, by definition, not original; they were trained on existing material and are built to produce the most likely continuation of a discussion.

However, the author only compares the extremes: either someone writes everything on their own, or they prompt a language model and copy-paste the output. There’s a huge spectrum of LLM use in between! Author and AI can collaborate on a text, with the author staying in control the whole time: debating with the LLM, getting inspired by it, and editing its outputs.

The article has the catchy title “I’d rather read the prompt”, but there is no single prompt; it’s a discussion.

That’s it for today, sharpen your axes this weekend,

Péter